Showing posts from 2016

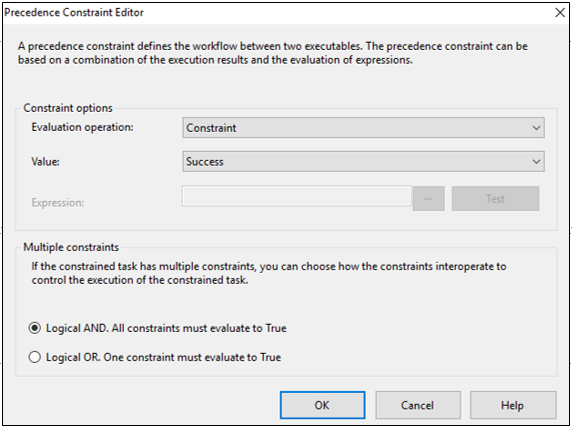

Working with Precedence Constraints in SQL Server Integration Services

Vipul

13:12

Precedence Constraints are useful to define workflow in a sequential man…

How to check if a SQL Server Agent job is running

Vipul

23:22

I was getting an error that "SQLServerAgent Error: Request to run job <…

Download a data file from a Claims based authentication SharePoint 2010 or SharePoint 2013 site programmatically using C# programming language.

Vipul

00:16

It was required to download a few data files from a SharePoint site which is us…

SSIS - How To Load Multiple Files (.txt or .csv or .excel) To a Table in SQL Server?

Vipul

14:49

Overview: Data upload from multiple file formats into one destination table …
